Sample Code


Downloading the data and installing packages

Libraries allowed in the competition: you can use the libraries preinstalled in Google Colab, plus additional libraries such as SimpleITK and pydicom.

The list of usable libraries and their versions is available from this repository.

https://docs.iaaa.ai

pip install SimpleITK pydicom

git clone https://github.com/iAAA-event/iAAA-MRI-Challenge.git

unzip /content/iAAA-MRI-Challenge/MRI_Labeling_normal_abnormal_SPI_p0_s0.zip -d ~/data

Data preparation

Path settings


from pathlib import Path

ROOT_DATA_DIR = Path('~/data/MRI_Labeling_normal_abnormal_SPI_p0_s0').expanduser().absolute()

DATA_DIR = ROOT_DATA_DIR / 'data'

LABELS_PATH = ROOT_DATA_DIR / 'labels.csv'

PREPARED_DATA_DIR = Path('~/prepared_data').expanduser().absolute()

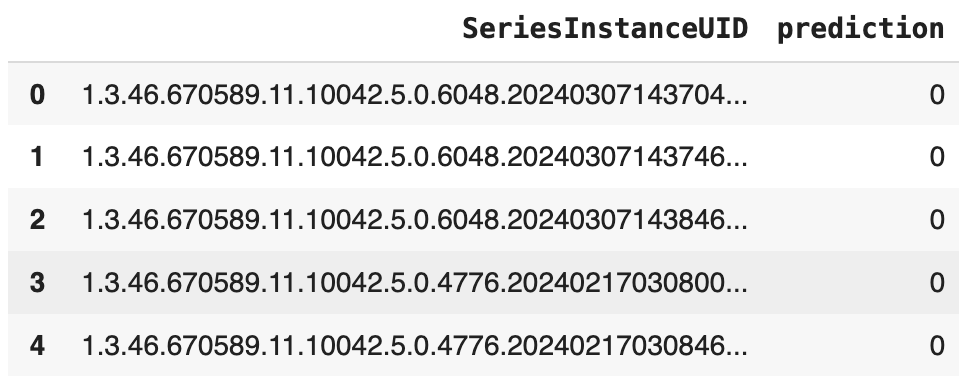
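Before going further, it can help to confirm that the archive was extracted where the path settings above expect it. The following is a minimal sketch: `missing_paths` is a hypothetical helper, not part of the challenge repository, and it assumes only the layout implied by the settings above (a `data` directory and a `labels.csv` file under the root directory).

```python
from pathlib import Path
from typing import List


def missing_paths(root_data_dir: Path) -> List[Path]:
    """Return the expected entries that are absent under root_data_dir."""
    # Assumed layout: <root>/data (one folder per series) and <root>/labels.csv
    expected = [root_data_dir / 'data', root_data_dir / 'labels.csv']
    return [p for p in expected if not p.exists()]
```

Calling `missing_paths(ROOT_DATA_DIR)` should return an empty list when the unzip step succeeded; a non-empty list names what is missing.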

Label metadata


import pandas as pd

annotations = pd.read_csv(LABELS_PATH).drop(['normal', 'corrupted'], axis=1)

annotations.rename(
    {
        "abnormal": "prediction"
    },
    axis=1,
    inplace=True
)

print(annotations.head(5))
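The dataset code that follows uses two DataFrames, `training_df` and `validation_df`, that this page never defines. One possible way to obtain them is a label-stratified random split of `annotations`. This is only a sketch under stated assumptions: `split_annotations`, the 20% validation fraction, and the seed are illustrative choices, not the organizers' method, and the frame is assumed to have a unique index and a `prediction` column.

```python
import pandas as pd


def split_annotations(df: pd.DataFrame, val_fraction: float = 0.2, seed: int = 7):
    """Split df into (training_df, validation_df) with a similar label ratio in both."""
    # Draw the validation rows separately within each label value.
    validation_df = df.groupby('prediction').sample(frac=val_fraction, random_state=seed)
    # Everything that was not drawn for validation is used for training.
    training_df = df.drop(validation_df.index)
    return training_df.reset_index(drop=True), validation_df.reset_index(drop=True)
```

Usage would be `training_df, validation_df = split_annotations(annotations)`. If several series belong to the same patient, a split grouped by patient would be a safer choice than a purely random one.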

Building the dataset


import numpy as np
import tensorflow as tf
from tqdm import tqdm

# NOTE: read_dicom_series, apply_windowing_using_header_on_series, training_df and
# validation_df are not defined on this page; they must exist before this code runs.

def prepare_data(df: pd.DataFrame, split: str, target_h: int, target_w: int, expected_num_slices: int):
    """Window, resize and crop every series in df, then cache each one as a .npy file."""
    prepared_data_dir_for_split = PREPARED_DATA_DIR / split
    prepared_data_dir_for_split.mkdir(parents=True, exist_ok=True)

    prepared_data_paths = list()
    for ind, row in tqdm(df.iterrows(), total=len(df)):
        siuid = row['SeriesInstanceUID']
        study_path = DATA_DIR / siuid

        series = read_dicom_series(study_path)
        windowed_series = apply_windowing_using_header_on_series(series['array'], series['headers'])

        # Resize (padding to keep the aspect ratio) only when the in-plane size differs.
        if (windowed_series.shape[1] != target_h) or (windowed_series.shape[2] != target_w):
            arr = np.squeeze(tf.image.resize_with_pad(np.expand_dims(windowed_series, axis=-1), target_h, target_w), axis=-1)
        else:
            arr = windowed_series

        # Center-crop along the slice axis. Series with fewer than expected_num_slices
        # slices are left unchanged here and need separate handling.
        if len(arr) > expected_num_slices:
            start = (len(arr) - expected_num_slices) // 2
            arr = arr[start: start + expected_num_slices]

        prepared_path = prepared_data_dir_for_split / f'{siuid}.npy'
        with open(prepared_path, 'wb') as f:
            np.save(f, arr)
        prepared_data_paths.append(prepared_path)

    df['prepared_path'] = prepared_data_paths

expected_num_slices = 16
target_h = 256
target_w = 256

prepare_data(training_df, 'training', target_h, target_w, expected_num_slices)
prepare_data(validation_df, 'validation', target_h, target_w, expected_num_slices)
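The slice-count adjustment in `prepare_data` is a center crop, which only makes sense when a series has at least the expected number of slices. A series with fewer slices would still end up with the wrong depth and fail later when volumes are batched together. The sketch below shows one way to cover both cases. Note that `center_crop_or_pad` is a hypothetical helper and that zero-padding short series is an assumption made here for illustration, not something the original code does.

```python
import numpy as np


def center_crop_or_pad(volume: np.ndarray, expected_num_slices: int) -> np.ndarray:
    """Return volume with exactly expected_num_slices along axis 0."""
    n = volume.shape[0]
    if n >= expected_num_slices:
        # Enough slices: keep the central block.
        start = (n - expected_num_slices) // 2
        return volume[start:start + expected_num_slices]
    # Too few slices: add zero slices, split as evenly as possible between both ends.
    missing = expected_num_slices - n
    before = missing // 2
    after = missing - before
    pad_width = [(before, after)] + [(0, 0)] * (volume.ndim - 1)
    return np.pad(volume, pad_width, mode='constant')
```

Inside `prepare_data`, the slice handling could then be written as `arr = center_crop_or_pad(arr, expected_num_slices)`.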

def read_npy_file(item):
    # tf.numpy_function passes the path as bytes, so decode it first.
    data = np.load(item.decode())
    # Add a trailing channel axis: (slices, h, w) -> (slices, h, w, 1)
    return np.expand_dims(data.astype(np.float32), axis=-1)

def create_ds(df: pd.DataFrame, batch_size: int) -> tf.data.Dataset:
    paths = [str(i) for i in df['prepared_path']]
    volumes = tf.data.Dataset.from_tensor_slices(paths).map(lambda item: tf.numpy_function(read_npy_file, [item], [tf.float32,])[0])
    labels = tf.data.Dataset.from_tensor_slices(df['prediction'].values)
    # Pass a tuple: older TensorFlow versions do not accept the datasets as separate arguments.
    zipped = tf.data.Dataset.zip((volumes, labels))
    ds = zipped.shuffle(len(paths), seed=7).batch(batch_size).repeat()
    return ds

BATCH_SIZE = 4

# The datasets repeat forever, so the number of batches per epoch is computed explicitly.
training_ds = create_ds(training_df, BATCH_SIZE)
n_iter_training = (len(training_df) // BATCH_SIZE) + int((len(training_df) % BATCH_SIZE) > 0)

validation_ds = create_ds(validation_df, BATCH_SIZE)
n_iter_validation = (len(validation_df) // BATCH_SIZE) + int((len(validation_df) % BATCH_SIZE) > 0)

tr_gen = training_ds.as_numpy_iterator()
val_gen = validation_ds.as_numpy_iterator()
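The `n_iter_training` and `n_iter_validation` expressions above are a ceiling division: the number of batches needed to see every sample once, counting a final partial batch. Because the datasets are repeated indefinitely, Keras has to be told this number. As a small illustration, the same arithmetic can be written with a hypothetical helper named `steps_per_epoch`:

```python
import math


def steps_per_epoch(num_samples: int, batch_size: int) -> int:
    """Batches needed to cover num_samples once; the last batch may be smaller."""
    return math.ceil(num_samples / batch_size)


# 10 samples in batches of 4 -> batches of 4, 4 and 2
print(steps_per_epoch(10, 4))  # 3
```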

AI model

Building the model


import tensorflow.keras.layers as layers

import tensorflow.keras as keras

def create_model(expected_num_slices: int, target_h: int, target_w: int) -> keras.Model:
    inputs = keras.Input((expected_num_slices, target_h, target_w, 1))

    x = layers.Conv3D(filters=64, kernel_size=3, padding='same', activation="relu")(inputs)
    x = layers.MaxPool3D(pool_size=2)(x)
    x = layers.BatchNormalization()(x)

    x = layers.Conv3D(filters=64, kernel_size=3, padding='same', activation="relu")(x)
    x = layers.MaxPool3D(pool_size=2)(x)
    x = layers.BatchNormalization()(x)

    x = layers.Conv3D(filters=128, kernel_size=3, padding='same', activation="relu")(x)
    x = layers.MaxPool3D(pool_size=2)(x)
    x = layers.BatchNormalization()(x)

    x = layers.Conv3D(filters=256, kernel_size=3, padding='same', activation="relu")(x)
    x = layers.MaxPool3D(pool_size=2)(x)
    x = layers.BatchNormalization()(x)

    x = layers.GlobalAveragePooling3D()(x)
    x = layers.Dense(units=512, activation="relu")(x)
    x = layers.Dropout(0.3)(x)

    outputs = layers.Dense(units=1, activation="sigmoid")(x)

    model = keras.Model(inputs, outputs)
    return model

expected_num_slices = 16

target_h = 256

target_w = 256

model = create_model(expected_num_slices, target_h, target_w)

metrics = [
    keras.metrics.SensitivityAtSpecificity(0.95),
    keras.metrics.SpecificityAtSensitivity(0.95),
    keras.metrics.AUC(name='auc')
]

loss = keras.losses.BinaryCrossentropy()

optimizer = keras.optimizers.Adam(0.001)

model.compile(loss=loss, metrics=metrics, optimizer=optimizer)

model.summary()
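To see why the input depth matters for this architecture, it helps to trace the feature-map size through the network. The `Conv3D` layers use `padding='same'` and keep the size unchanged, while each `MaxPool3D(pool_size=2)` halves every dimension, rounding down. The sketch below does that arithmetic with a hypothetical helper, `pooled_shape`; it assumes the default pooling stride and `'valid'` padding used in `create_model`.

```python
def pooled_shape(shape, num_pools, pool_size=2):
    """Spatial shape after num_pools max-pooling layers (stride equal to pool_size)."""
    for _ in range(num_pools):
        shape = tuple(dim // pool_size for dim in shape)
    return shape


# Four pooling layers applied to a (16, 256, 256) volume
print(pooled_shape((16, 256, 256), num_pools=4))  # (1, 16, 16)
```

With 16 slices the depth axis shrinks to exactly 1 before global average pooling, so an input with fewer than 16 slices would not survive the four pooling stages.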

Training the model


checkpoints_dir = Path('checkpoints')
checkpoints_dir.mkdir(exist_ok=True)

to_track = 'val_auc'
# Keras fills in {epoch} and the tracked metric each time it saves. Recent Keras
# versions (Keras 3) require full-model checkpoint paths to end in .keras or .h5.
checkpoint_path = str(checkpoints_dir) + "/sm-{epoch:04d}" + "-{" + to_track + ":4.5f}" + ".keras"

callbacks = [
    keras.callbacks.ModelCheckpoint(filepath=checkpoint_path, save_best_only=False, save_weights_only=False)
]

history = model.fit(
    training_ds,
    steps_per_epoch=n_iter_training,
    validation_data=validation_ds,
    validation_steps=n_iter_validation,
    epochs=10,
    callbacks=callbacks
)
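The checkpoint path is a Python format template: at the end of each epoch, `ModelCheckpoint` substitutes the epoch number and the logged metrics into it. The short sketch below reproduces that substitution by hand for the name template used above; the epoch and metric values are made up purely for illustration, and any file extension appended to the path is left untouched by the formatting.

```python
to_track = 'val_auc'
template = "checkpoints/sm-{epoch:04d}" + "-{" + to_track + ":4.5f}"

# Illustrative values only: epoch 3 with a validation AUC of 0.876543
example = template.format(epoch=3, val_auc=0.876543)
print(example)  # checkpoints/sm-0003-0.87654
```

Because the metric value is part of the file name, the checkpoint with the highest validation AUC can be picked out by sorting the saved names.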